ScienceQA

Learn to Explain: Multimodal Reasoning via Thought Chains for Science Question Answering

(NeurIPS 2022)

When answering a question, humans utilize the information available across different modalities to synthesize a consistent and complete chain of thought (CoT). This process is normally a black box in the case of deep learning models like large-scale language models. Recently, science question benchmarks have been used to diagnose the multi-hop reasoning ability and interpretability of an AI system. However, existing datasets fail to provide annotations for the answers, or are restricted to the textual-only modality, small scales, and limited domain diversity. To this end, we present Science Question Answering (ScienceQA), a new benchmark that consists of ~21k multimodal multiple choice questions with a diverse set of science topics and annotations of their answers with corresponding lectures and explanations. We further design language models to learn to generate lectures and explanations as the chain of thought (CoT) to mimic the multi-hop reasoning process when answering ScienceQA questions. ScienceQA demonstrates the utility of CoT in language models, as CoT improves the question answering performance by 1.20% in few-shot GPT-3 and 3.99% in fine-tuned UnifiedQA.

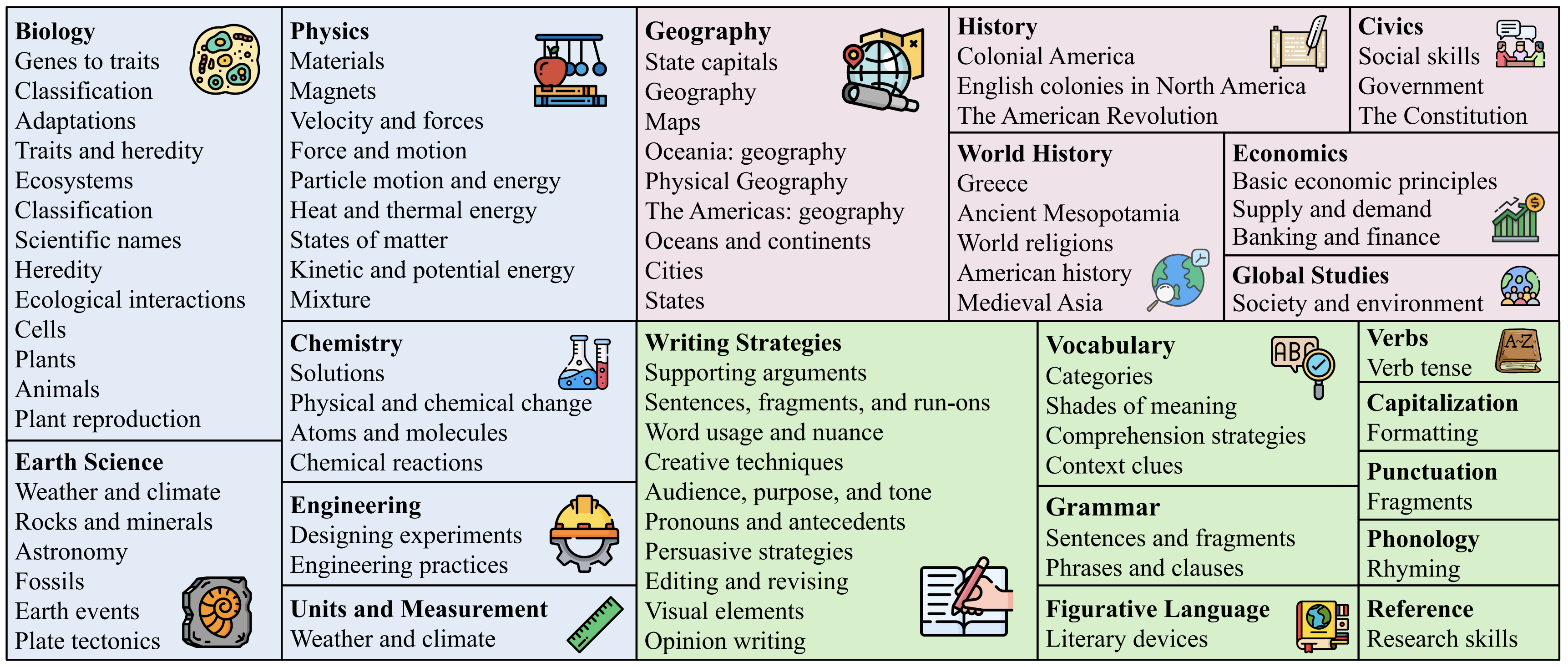

ScienceQA is collected from elementary and high school science curricula, and contains 21,208 multimodal multiple-choice science questions. Out of the questions in ScienceQA, 10,332 (48.7%) have an image context, 10,220 (48.2%) have a text context, and 6,532 (30.8%) have both. Most questions are annotated with grounded lectures (83.9%) and detailed explanations (90.5%). The lecture and explanation provide general external knowledge and specific reasons, respectively, for arriving at the correct answer. To the best of our knowledge, ScienceQA is the first large-scale multimodal dataset that annotates lectures and explanations for the answers.
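The context percentages above are each count divided by the 21,208 total (for example, 10,332 / 21,208 ≈ 48.7%). Below is a minimal sketch of how such statistics could be tallied. It assumes, hypothetically, that each problem is a dict with an `image` field (a filename, or `None` when there is no image) and a `hint` field holding the text context (empty when absent). These field names are illustrative assumptions, not a documented schema.

```python
from typing import Iterable, Mapping


def context_stats(problems: Iterable[Mapping]) -> dict:
    """Count questions with an image context, a text context, or both.

    Assumes (hypothetically) an 'image' key holding a filename or None,
    and a 'hint' key holding the text context ('' or None when absent).
    """
    total = image = text = both = 0
    for p in problems:
        total += 1
        has_image = bool(p.get("image"))
        has_text = bool((p.get("hint") or "").strip())
        image += has_image          # bools add as 0/1
        text += has_text
        both += has_image and has_text

    def pct(n: int) -> float:
        # Share of all questions, rounded to one decimal place.
        return round(100.0 * n / total, 1) if total else 0.0

    return {
        "total": total,
        "image": (image, pct(image)),
        "text": (text, pct(text)),
        "both": (both, pct(both)),
    }
```

Because "image" and "text" overlap, the two percentages can sum to more than 100%; the "both" count is what separates them.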

In contrast to previous datasets, ScienceQA has richer domain diversity, spanning three subjects: natural science, language science, and social science. Questions in each subject are categorized first by topic (Biology, Physics, Chemistry, etc.), then by category (Plants, Cells, Animals, etc.), and finally by skill (Classify fruits and vegetables as plant parts, Identify countries of Africa, etc.). ScienceQA features 26 topics, 127 categories, and 379 skills that cover a wide range of domains.
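The four-level hierarchy (subject → topic → category → skill) maps naturally onto a nested dictionary. The sketch below assumes, hypothetically, that each problem record carries `subject`, `topic`, `category`, and `skill` fields; it groups skills under their parents and counts distinct names at each level, which is one way totals like "26 topics, 127 categories, and 379 skills" could be derived.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping

LEVELS = ("subject", "topic", "category", "skill")  # assumed field names


def build_taxonomy(problems: Iterable[Mapping]):
    """Group skills as tree[subject][topic][category] -> set of skills."""
    tree = defaultdict(lambda: defaultdict(lambda: defaultdict(set)))
    for p in problems:
        tree[p["subject"]][p["topic"]][p["category"]].add(p["skill"])
    return tree


def count_levels(problems: Iterable[Mapping]) -> Dict[str, int]:
    """Count distinct names at each level of the hierarchy."""
    problems = list(problems)  # allow a single-pass iterable as input
    return {level: len({p[level] for p in problems}) for level in LEVELS}
```

Counting distinct names (rather than distinct paths) assumes a name is not reused under two different parents; if it were, counting full paths would be the safer choice.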

For more details, you can explore the dataset and its visualizations here: Explore and Visualizations.

Our dataset is distributed under the CC BY-NC-SA (Attribution-NonCommercial-ShareAlike) license. You can download it from ScienceQA (Google Drive) or check out our GitHub repository.

💥 The ScienceQA dataset is now available on Hugging Face Datasets!

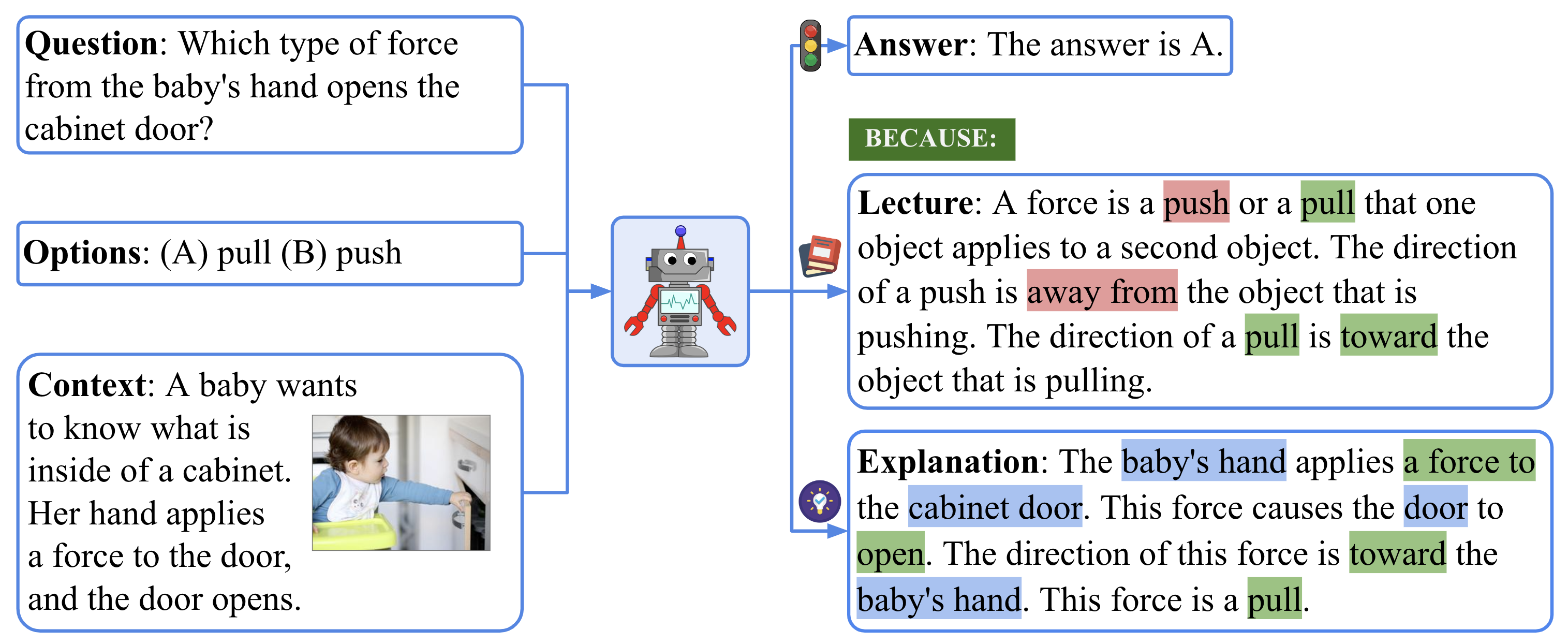

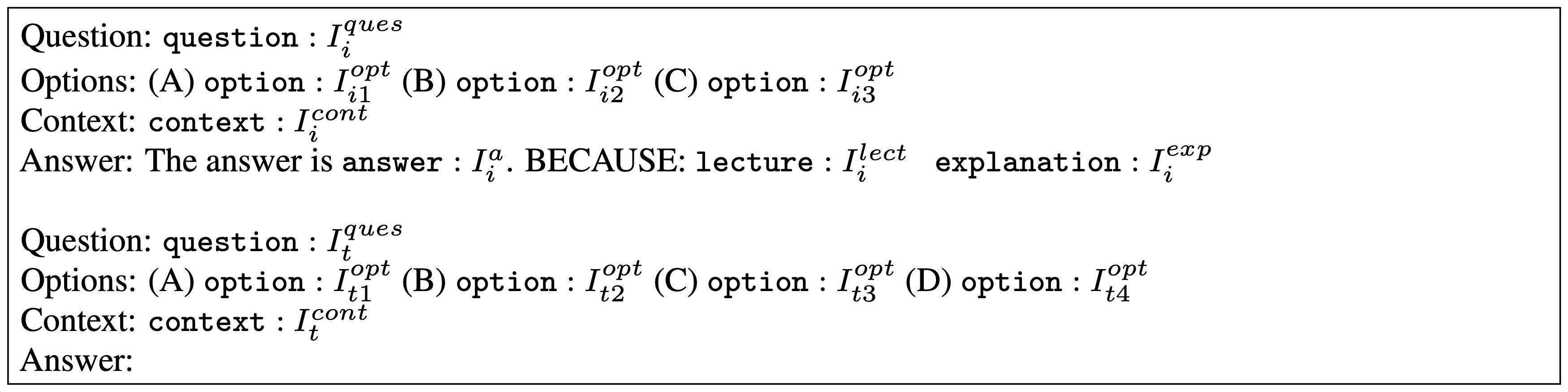

We build a few-shot GPT-3 model via chain-of-thought (CoT) prompting to generate the answer, followed by the lecture and the explanation.
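To make the answer-then-lecture-then-explanation ordering concrete, here is a minimal sketch of assembling a few-shot CoT prompt. The field labels ("Question:", "Context:", "Options:", "Answer:") and the "The answer is X. BECAUSE: ..." phrasing are illustrative assumptions, not necessarily the exact template used in the paper; the `Example` class and function names are hypothetical. Solved training examples include the answer followed by their lecture and explanation, and the test question ends with an open "Answer:" for the model to complete.

```python
from dataclasses import dataclass
from string import ascii_uppercase
from typing import List


@dataclass
class Example:
    question: str
    context: str
    choices: List[str]
    answer: int              # index into choices
    lecture: str = ""
    explanation: str = ""


def format_options(choices: List[str]) -> str:
    # Label options (A), (B), ... in order.
    return " ".join(f"({ascii_uppercase[i]}) {c}" for i, c in enumerate(choices))


def format_example(ex: Example, with_answer: bool) -> str:
    lines = [
        f"Question: {ex.question}",
        f"Context: {ex.context or 'N/A'}",
        f"Options: {format_options(ex.choices)}",
    ]
    if with_answer:
        letter = ascii_uppercase[ex.answer]
        # Answer first, then the lecture and explanation as the chain of thought.
        cot = f"{ex.lecture} {ex.explanation}".strip()
        lines.append(f"Answer: The answer is {letter}. BECAUSE: {cot}".rstrip())
    else:
        lines.append("Answer:")  # left open for the model to complete
    return "\n".join(lines)


def build_cot_prompt(train_examples: List[Example], test_example: Example) -> str:
    blocks = [format_example(ex, with_answer=True) for ex in train_examples]
    blocks.append(format_example(test_example, with_answer=False))
    return "\n\n".join(blocks)
```

Placing the answer before the lecture and explanation means the predicted option can be read off the start of the generation, while the rest serves as the generated rationale.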

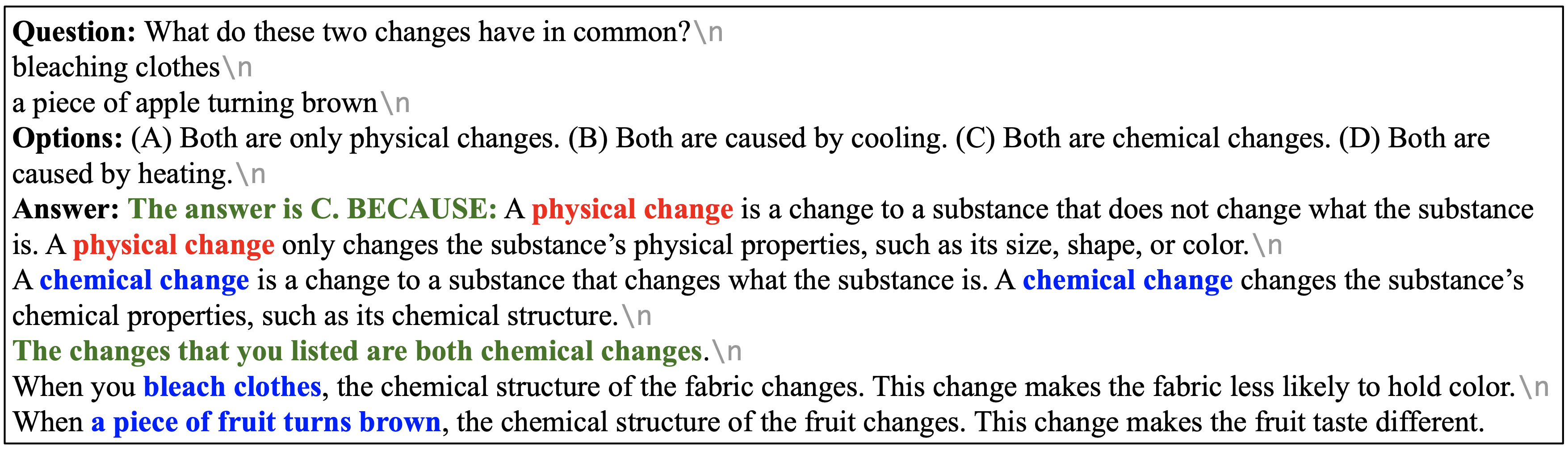

The few-shot GPT-3 model (CoT) achieves a state-of-the-art accuracy of 75.17% on ScienceQA. One prediction example is visualized below. We can see that GPT-3 (CoT) predicts the correct answer and generates a reasonable lecture and explanation that mimic the human thought process.
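Accuracy here is the fraction of test questions whose predicted option matches the gold option, times 100. The sketch below shows one way such a score could be computed from raw generations. It assumes, as a hypothetical convention, that each generation states its choice as "The answer is X" with X a letter from A to E (an assumed upper bound on the number of options); it is not the project's official evaluation script. Generations with no parsable answer count as wrong.

```python
import re
from typing import List, Optional

# Hypothetical answer phrase; tolerates an optional "(B)" style parenthesis.
ANSWER_RE = re.compile(r"The answer is \(?([A-E])\)?")


def extract_answer(generation: str) -> Optional[str]:
    """Return the predicted option letter, or None if none is found."""
    match = ANSWER_RE.search(generation)
    return match.group(1) if match else None


def accuracy(generations: List[str], gold_letters: List[str]) -> float:
    """Percentage of generations whose extracted letter equals the gold letter."""
    if len(generations) != len(gold_letters):
        raise ValueError("generations and gold_letters must have the same length")
    if not gold_letters:
        return 0.0
    correct = sum(extract_answer(g) == gold for g, gold in zip(generations, gold_letters))
    return 100.0 * correct / len(gold_letters)
```

Treating unparsable outputs as incorrect keeps the metric conservative; an alternative would be to fall back to a random or most-frequent option.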

The results of other baselines and recent work are reported on the Leaderboard page.

Pan Lu, Swaroop Mishra, Tony Xia, Liang Qiu, Kai-Wei Chang, Song-Chun Zhu, Oyvind Tafjord, Peter Clark, Ashwin Kalyan

The 36th Conference on Neural Information Processing Systems (NeurIPS), 2022

Paper / PDF / Code

View on the GitHub repository.

If the paper, code, or dataset inspires you, please cite us:

@inproceedings{lu2022learn,
  title={Learn to Explain: Multimodal Reasoning via Thought Chains for Science Question Answering},
  author={Lu, Pan and Mishra, Swaroop and Xia, Tony and Qiu, Liang and Chang, Kai-Wei and Zhu, Song-Chun and Tafjord, Oyvind and Clark, Peter and Kalyan, Ashwin},
  booktitle={The 36th Conference on Neural Information Processing Systems (NeurIPS)},
  year={2022}
}

¹University of California, Los Angeles; ²Arizona State University; ³Allen Institute for AI

Have questions about ScienceQA or want to get in touch? Contact Pan Lu via the contact page, or open an issue on GitHub.